Bonus Lab: Advanced Pipeline Customization and Artifact Management

Congratulations on reaching this point! You’ve successfully learned the fundamentals of working with pipelines and managing artifacts. To take your skills to the next level, we present a Bonus Lab that will help you explore more advanced topics in pipeline customization and artifact management.

This section is optional. It is not required to complete the bootcamp, but it offers additional hands-on experience and tools for real-world AI pipeline workflows.

Pre-requisites

Make sure you have completed the main pipeline module and are familiar with:

- The basics of pipeline creation and artifact management.

- How to configure and store artifacts using default tools.

- Basic Python scripting and command-line interaction.

Build and Deploy an Airflow Pipeline with Elyra

Pre-requisites:

- Fork this repository: https://github.com/red-hat-data-services/telecom-customer-churn-airflow

- Airflow running in the cluster: https://ai-on-openshift.io/tools-and-applications/airflow/airflow/
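One way to confirm the second prerequisite is to query the Airflow webserver's health endpoint. The sketch below assumes an Airflow 2.x webserver that serves a JSON document at `/health`. The helper names (`health_url`, `is_healthy`, `fetch_health`) are illustrative, and the route host in the usage note is a placeholder, not a value from this lab.

```python
import json
import ssl
import urllib.request


def health_url(base_url: str) -> str:
    """Append /health to the Airflow route URL, tolerating a trailing slash."""
    return base_url.rstrip("/") + "/health"


def is_healthy(payload: dict) -> bool:
    """Return True when both the metadata database and the scheduler report healthy."""
    components = ("metadatabase", "scheduler")
    return all(payload.get(name, {}).get("status") == "healthy" for name in components)


def fetch_health(base_url: str, verify_tls: bool = True, timeout: float = 10.0) -> dict:
    """Fetch and decode the JSON health document from the webserver."""
    context = ssl.create_default_context()
    if not verify_tls:
        # Assumption: only needed on lab clusters that use self-signed certificates.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(health_url(base_url), timeout=timeout, context=context) as resp:
        return json.load(resp)
```

To use it, pass the host printed by `oc get route -n airflow`, for example `is_healthy(fetch_health("https://<airflow-route-host>"))`. A result of `False` suggests the scheduler or the metadata database needs attention before you continue.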

To build and deploy an Airflow pipeline with Elyra, you need to follow these steps:

-

Add Elyra custom notebook image - quay.io/eformat/elyra-base:0.2.1

Review the lesson to add custom notebook image to RHOAI

-

Create workbench with custom-elyra image

-

Add Data Connection

You can use deployed Minio instance

-

Clone fork of git repository - https://github.com/<YOUR-ID>red-hat-data-services/telecom-customer-churn-airflow

-

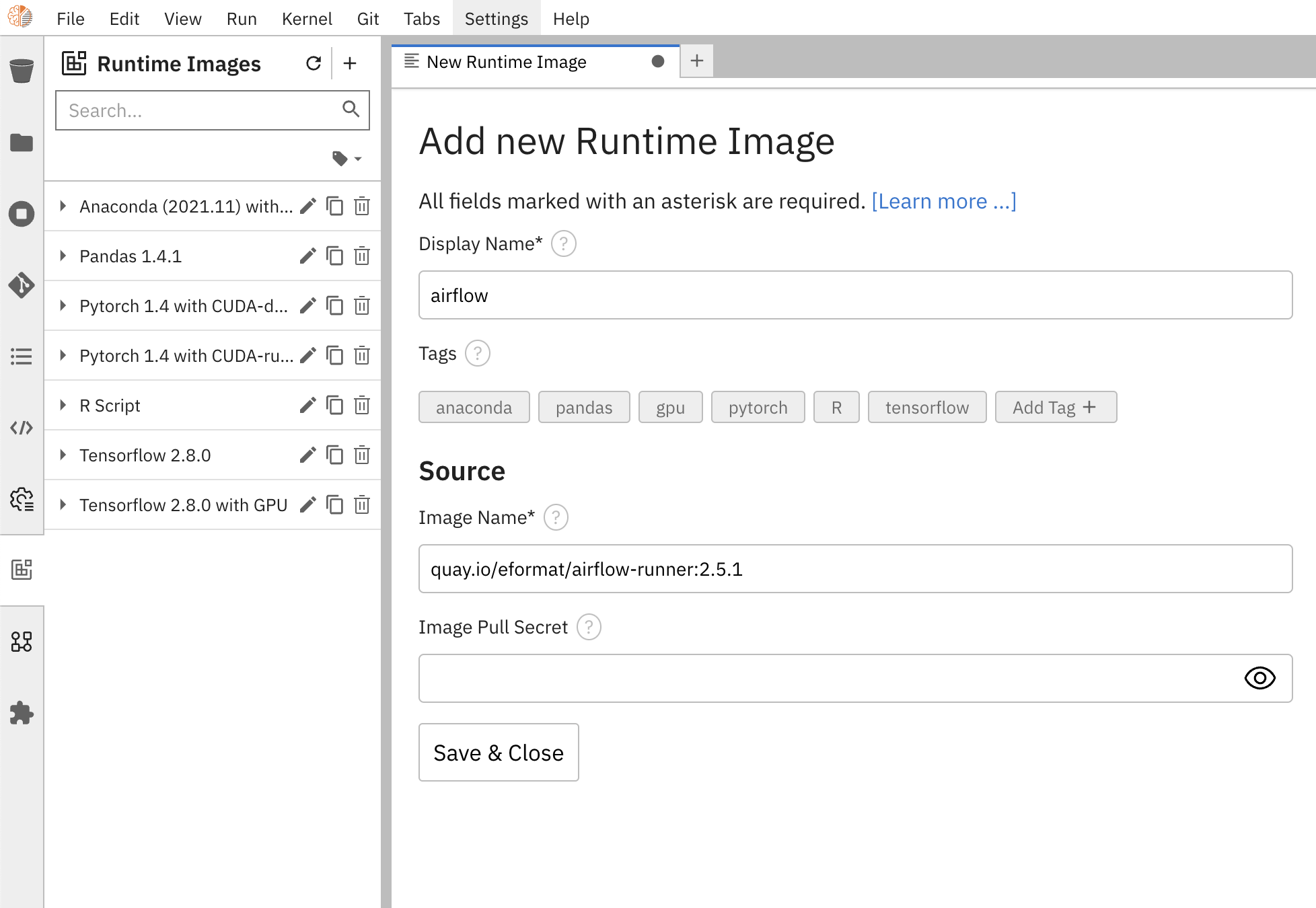

Configure Elyra to work with Airflow. Add custom runtime image - quay.io/eformat/airflow-runner:2.5.1

- Create a runtime configuration.

  Solution:

  - Display Name: airflow

  - Airflow settings:

    - Apache Airflow UI Endpoint: run `oc get route -n airflow` to get the route

    - Apache Airflow User Namespace: airflow

  - GitHub/GitLab settings:

    - Git type: GITHUB

    - GitHub server API Endpoint: https://api.github.com

    - GitHub DAG Repository: https://github.com/<YOUR-ID>/telecom-customer-churn-airflow

    - GitHub DAG Repository Branch: your branch

    - Personal Access Token: a personal access token with permission to push to the repository

  - Cloud Object Storage settings: the Minio storage details

- Test and run the DAG: https://github.com/<YOUR-ID>/telecom-customer-churn-airflow
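Before typing the runtime configuration values into the Elyra form, it can help to collect them in one place and check that none are empty. The following is a minimal sketch, not Elyra's own API. The dictionary keys are assumed names chosen to mirror the form fields listed in the steps above. The `AWS_*` environment variable names are an assumption about what the workbench's Data Connection exposes, so verify them in your own workbench. The functions `build_runtime_config` and `missing_fields` are hypothetical helpers.

```python
import os


def build_runtime_config(github_id, branch, token, airflow_endpoint, env=None):
    """Collect the runtime configuration values from this lab in one dictionary."""
    env = os.environ if env is None else env
    return {
        "display_name": "airflow",
        # Airflow settings
        "api_endpoint": airflow_endpoint,  # from: oc get route -n airflow
        "user_namespace": "airflow",
        # GitHub settings
        "git_type": "GITHUB",
        "github_api_endpoint": "https://api.github.com",
        "github_repo": f"https://github.com/{github_id}/telecom-customer-churn-airflow",
        "github_branch": branch,
        "github_repo_token": token,
        # Cloud Object Storage settings (assumed Data Connection variable names)
        "cos_endpoint": env.get("AWS_S3_ENDPOINT", ""),
        "cos_bucket": env.get("AWS_S3_BUCKET", ""),
        "cos_username": env.get("AWS_ACCESS_KEY_ID", ""),
        "cos_password": env.get("AWS_SECRET_ACCESS_KEY", ""),
    }


def missing_fields(config):
    """Return the sorted names of fields that are still empty."""
    return sorted(name for name, value in config.items() if not value)
```

Calling `missing_fields(build_runtime_config(...))` inside the workbench returns an empty list when every value is present. Any names it does return point at the Data Connection or Git settings to revisit before running the DAG.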