Bootstrap the AI Accelerator Project

In this section we will execute the bootstrap installation script found in the AI Accelerator.

| Note the term "bootstrap" rather than "install": this script sets up only the bare minimum components, such as ArgoCD. ArgoCD then takes over and performs the remainder of the GitOps process, installing the rest of the software stack, including RHOAI. |

(Optional) Create a Fork of the AI Accelerator Project

It’s highly recommended that you create a fork of the AI Accelerator project, which gives you a copy that you can customize and manipulate as desired.

-

Navigate to the project: https://github.com/redhat-ai-services/ai-accelerator

-

Click the "Fork" button in the navigation bar.

-

Select the Owner. This is typically your personal GitHub account, but could be an organization if desired.

| You can see who else has forked the repo by clicking the forks link in the "About" section. It’s interesting to see who else is using this accelerator project! |
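Once forked, your copy lives under your own owner name, so the clone URL used later differs from the original only in the owner segment. The sketch below illustrates this under a few assumptions: the fork keeps the repository name `ai-accelerator`, the helper `fork_clone_url` and the owner `your-github-user` are hypothetical names used only for illustration, and naming the original repository's remote `upstream` is a common convention rather than something the project requires.

```shell
#!/usr/bin/env bash
# Upstream repository URL, taken from this guide.
UPSTREAM_URL="https://github.com/redhat-ai-services/ai-accelerator.git"

# Hypothetical helper: print the clone URL for a fork owned by $1.
# With no argument it falls back to the upstream owner.
# Assumes the fork kept the repository name "ai-accelerator".
fork_clone_url() {
  owner="${1:-redhat-ai-services}"
  printf 'https://github.com/%s/ai-accelerator.git\n' "$owner"
}

# Example usage (commented out so that sourcing this file has no side effects):
#   git clone "$(fork_clone_url your-github-user)"
#   cd ai-accelerator
#   git remote add upstream "$UPSTREAM_URL"   # lets you fetch later upstream changes
```

Keeping an `upstream` remote alongside your fork makes it easier to pull in later changes from the parent project.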

Clone the AI Accelerator Project

Clone (download) the Git repository containing the AI Accelerator, since we will be running the bootstrap scripts from your local machine.

| If you can’t or prefer not to run the installation from your local machine (such as in a locked-down corporate environment), you can use the Bastion host instead. This is a Linux virtual machine running on AWS; the SSH login details are provided in the provisioning email you received from demo.redhat.com. Be aware that the Bastion host is deprovisioned when the cluster is deleted, so git commit and push any changes frequently. |

-

Git clone the following repository to your local machine. If you’re using a fork, then change the repository URL in the command below to match yours:

git clone https://github.com/redhat-ai-services/ai-accelerator.git

Bootstrap the Demo Cluster

Carefully follow the instructions found in documentation/installation.md, with the following specifics:

-

Use the Demo cluster credentials when logging into OpenShift

-

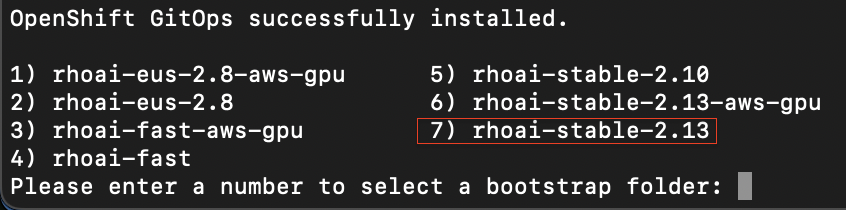

Select number 7 - stable-2.13 when prompted:

This will install all the applications in the bootstrap script and also provide an openshift-gitops-server (ArgoCD) link. Options 3 and 6 will install RHOAI and related operators. Since we are using GPUs for the demo instance, it will also install the NVIDIA GPU Operator and the Node Feature Discovery (NFD) Operator.

-

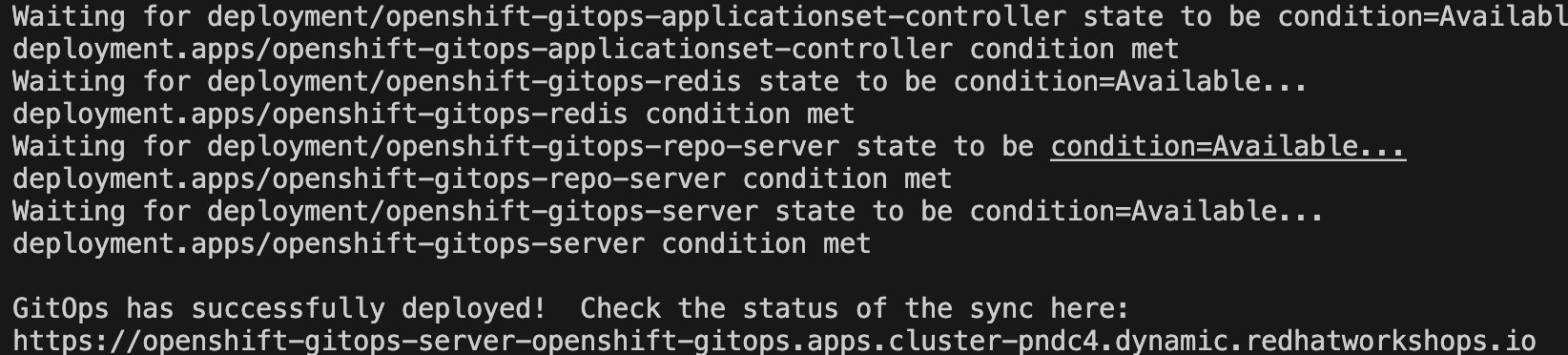

Log in to Argo CD via the link using your OpenShift credentials and wait until everything syncs successfully.

![Argo home screen](Argo_home_screen.png)

It takes around 25-30 minutes for everything to provision and start up. You can monitor the status of all the components in the ArgoCD console.

| Once provisioned, the project will create a link to ArgoCD in the Applications menu of OpenShift. However, you can also copy the ArgoCD URL from the terminal once the bootstrap script has completed. |
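The sync status shown in the ArgoCD console can also be read from the command line. The following is a minimal sketch and is not part of the AI Accelerator itself. It assumes that the Argo CD instance runs in the `openshift-gitops` namespace (the OpenShift GitOps default) and that you are already logged in with `oc`. The function names `list_argo_apps` and `all_synced` are hypothetical.

```shell
#!/usr/bin/env bash
# Assumption: OpenShift GitOps runs in its default namespace.
ARGO_NS="${ARGO_NS:-openshift-gitops}"

# Print one "name<TAB>sync status<TAB>health status" line per Argo CD Application.
list_argo_apps() {
  oc get applications.argoproj.io -n "$ARGO_NS" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.sync.status}{"\t"}{.status.health.status}{"\n"}{end}'
}

# Read lines in the format above on stdin.
# Succeeds only if there is at least one application and all are Synced and Healthy;
# otherwise prints the names of the applications still in progress.
all_synced() {
  awk -F'\t' '
    $2 != "Synced" || $3 != "Healthy" { pending = 1; print "waiting on: " $1 }
    END { exit (pending || NR == 0) ? 1 : 0 }
  '
}

# Example usage (requires a logged-in oc session):
#   until list_argo_apps | all_synced; do sleep 60; done
```

Separating the `oc` query from the check keeps the check usable on saved output, which can help when reviewing a sync that failed earlier.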

This GPU overlay also uses MachineAutoscalers. Since there are Inferencing Service examples that use GPUs, a g5.2xlarge machineset (with GPU) will spin up. This can take a few minutes.
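To see whether the GPU machine has come up, you can inspect the MachineSets from the command line. The sketch below is an illustration only: it assumes an AWS cluster (so the instance type sits under the AWS provider spec), a logged-in `oc` session, and the standard `openshift-machine-api` namespace. The function names `list_machinesets` and `ready_gpu_machines` are hypothetical.

```shell
#!/usr/bin/env bash
# Instance type named in this guide; override if your overlay differs.
GPU_INSTANCE_TYPE="${GPU_INSTANCE_TYPE:-g5.2xlarge}"

# Print "machineset<TAB>instance type<TAB>ready replicas" for each MachineSet.
# The instanceType path below applies to the AWS provider spec.
list_machinesets() {
  oc get machinesets.machine.openshift.io -n openshift-machine-api \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.providerSpec.value.instanceType}{"\t"}{.status.readyReplicas}{"\n"}{end}'
}

# Sum ready replicas across MachineSets of the GPU instance type
# (stdin in the format above). An empty third column, meaning no
# ready replicas yet, counts as zero.
ready_gpu_machines() {
  awk -F'\t' -v type="$GPU_INSTANCE_TYPE" '$2 == type { n += $3 } END { print n + 0 }'
}

# Example usage (requires a logged-in oc session):
#   list_machinesets | ready_gpu_machines
```

A result of 0 shortly after bootstrapping is expected, since the autoscaler needs a few minutes to add the GPU machine.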

| If the granite inference service fails to spin up, delete the deployment and Argo should redeploy it: oc delete deployment granite-predictor-00001-deployment -n ai-example-single-model-serving |

We will cover the ai-accelerator project overview in a later section.

Continue using the DEMO cluster for the subsequent exercises.

Questions for Further Consideration

Additional questions that could be discussed for this topic:

-

What’s the difference between "bootstrapping" and "installing" the new OpenShift cluster?

-

Why is forking an open source project a good idea?

-

How can a project fork be used to contribute back to the parent project with bug fixes, updates and new features?

-

Could, or should, the bootstrap shell script be converted to Ansible?

-

How does the bootstrap script provision GPU resources in the new OpenShift cluster? Hint: a quick walk through the logic in the source code should be a useful exercise, time permitting.

-

Where can I get help if the bootstrap process breaks?