Deploy vLLM on OpenShift AI with KServe and a ModelCar

- Log in to your provided OpenShift environment.

- Once you are in your OpenShift cluster, set up a new project for your work.

oc new-project rhaiis-demo

We will use a Helm chart to deploy our ModelCar on vLLM in OpenShift AI. This Helm chart is designed to be easily reusable, and we recommend starting from it for your own deployments. On a standard OpenShift cluster, you may also substitute the official Red Hat AI Inference Server (RHAIIS) image in the Helm chart deployment. For the purposes of this training experience, we are using the vllm-kserve image on OpenShift AI.

If you use the RHAIIS image shown in the previous module, you will have to satisfy the authentication requirements for pulling the image, which adds unnecessary barriers to the training experience.

You may view the helm chart at this link: https://github.com/redhat-ai-services/helm-charts/tree/main/charts/vllm-kserve and within our workshop repository under etx-llm-optimization-and-inference-leveraging/workshop_code/deploy_vllm/vllm-kserve.

Let’s take a look at our provided custom values file (etx-llm-optimization-and-inference-leveraging/workshop_code/deploy_vllm/vllm_rhoai_custom_1/values.yaml):

deploymentMode: RawDeployment

model:
  uri: oci://quay.io/redhat-ai-services/modelcar-catalog:granite-3.3-8b-instruct
  args:
    - "--max-model-len=130000"
    - "--enable-auto-tool-choice"
    - "--tool-call-parser=granite"
    - "--chat-template=/app/data/template/tool_chat_template_granite.jinja"

You may substitute the modelcar for a different model and adjust the arguments as desired using vLLM standard args: https://docs.vllm.ai/en/stable/configuration/engine_args.html#named-arguments. View our available modelcars here: https://quay.io/repository/redhat-ai-services/modelcar-catalog?tab=tags

If you choose a different model, make sure you change the --tool-call-parser and --chat-template values to match it.

- --tool-call-parser: See available options here: https://docs.vllm.ai/en/latest/cli/index.html#-tool-call-parser

- --chat-template: See available chat templates here: https://docs.vllm.ai/en/stable/features/tool_calling.html
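When you swap models, it is easy to change one argument and forget the other. The following is a minimal sketch of a pre-deployment sanity check over the args list from values.yaml. The function name check_tool_args is hypothetical. The first check reflects our understanding that vLLM expects --tool-call-parser whenever --enable-auto-tool-choice is set. The second check is only a naming heuristic of ours (the template file name should mention the parser name) and may produce false positives for templates that follow a different naming scheme.

```python
import os


def check_tool_args(args):
    """Return a list of human-readable problems found in a vLLM args list."""
    # Split "--flag=value" entries into {flag: value}; bare flags map to None.
    parsed = {}
    for arg in args:
        flag, _, value = arg.partition("=")
        parsed[flag] = value or None

    problems = []
    parser = parsed.get("--tool-call-parser")
    template = parsed.get("--chat-template")

    # Assumption: auto tool choice needs an explicit parser to be usable.
    if "--enable-auto-tool-choice" in parsed and not parser:
        problems.append("--enable-auto-tool-choice is set but --tool-call-parser is missing")

    # Heuristic only (not a vLLM rule): the template file name usually names the model family.
    if parser and template and parser not in os.path.basename(template):
        problems.append(
            f"--tool-call-parser={parser} does not appear in template name "
            f"{os.path.basename(template)}; check that they belong to the same model"
        )
    return problems


workshop_args = [
    "--max-model-len=130000",
    "--enable-auto-tool-choice",
    "--tool-call-parser=granite",
    "--chat-template=/app/data/template/tool_chat_template_granite.jinja",
]
print(check_tool_args(workshop_args))
```

Running the check against the workshop values reports no problems. Changing only the parser while leaving the Granite template in place would be flagged by the heuristic.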

Understanding the tool-call-parser and chat-template features

The --tool-call-parser and --chat-template arguments need to align with the model because they define the specific input and output formats that the model was trained on and expects during inference. Here’s a more detailed explanation:

- Chat Template (--chat-template):

  Purpose: A chat template is a Jinja2 template that structures the conversation history into a single string that the model’s tokenizer can process. Different models are fine-tuned on different chat formats. For example, Llama 2 style models wrap user turns in [INST] and [/INST] and the system prompt in <<SYS>> and <</SYS>>, while ChatML-style models delimit every message with <|im_start|> and <|im_end|>.

  Why Alignment is Crucial: If the chat template used during serving differs from the one used during the model’s training, the model receives an input string that it doesn’t recognize as a valid conversation. It won’t correctly interpret the roles of the speakers (user, assistant, system) or the boundaries between messages. This can lead to:

  - Nonsensical Responses: The model might generate garbled or irrelevant text because it’s trying to make sense of an unfamiliar input structure.

  - Failure to Follow Instructions: It might ignore system prompts or struggle to maintain conversational coherence.

  - Incorrect Tokenization: The tokenizer might produce a different sequence of tokens than intended if the template’s special tokens or formatting are not consistent with what the model expects.

- Tool Call Parser (--tool-call-parser):

  Purpose: For models that support function calling (i.e., generating structured outputs to use external tools), a tool call parser is responsible for extracting these structured calls (often in JSON format) from the raw text output generated by the model. The model is specifically fine-tuned to produce tool calls in a very particular syntax and format.

  Why Alignment is Crucial: If the --tool-call-parser during serving doesn’t match the format the model was trained to produce, the system won’t be able to correctly identify and parse the tool calls. For instance:

  - Parsing Failures: The parser might fail to extract any tool calls, even if the model did generate them, because it’s looking for a different structure or syntax.

  - Incorrect Tool Calls: It might incorrectly parse parts of the model’s output as tool calls, leading to errors or unintended actions when those "calls" are executed.

  - Loss of Functionality: The entire tool-use capability of the model becomes effectively unusable if the parser cannot correctly interpret the model’s tool-calling output.
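To make the chat template point concrete, here is a small sketch that renders the same conversation in two different layouts. Real chat templates are Jinja2 files applied by the tokenizer; the plain Python functions below (render_inst_style and render_chatml_style, both hypothetical names) are simplified stand-ins loosely modeled on the Llama 2 and ChatML conventions, not the exact templates any model ships with.

```python
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is vLLM?"},
]


def render_inst_style(messages):
    """Llama-2-like layout: the system prompt is folded into the first [INST] block."""
    system = "".join(m["content"] for m in messages if m["role"] == "system")
    out = ""
    for m in messages:
        if m["role"] == "user":
            prefix = f"<<SYS>>\n{system}\n<</SYS>>\n\n" if system else ""
            out += f"[INST] {prefix}{m['content']} [/INST]"
            system = ""  # only the first user turn carries the system prompt
        elif m["role"] == "assistant":
            out += f" {m['content']} "
    return out


def render_chatml_style(messages):
    """ChatML-like layout: every message is wrapped in start/end markers."""
    out = "".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    )
    # A trailing assistant header cues the model to start its reply.
    return out + "<|im_start|>assistant\n"


inst_prompt = render_inst_style(messages)
chatml_prompt = render_chatml_style(messages)
print(inst_prompt)
print(chatml_prompt)
```

The two prompts carry the same conversation but share almost no delimiters. A model trained on one layout and served with the other sees role markers it was never taught to interpret, which is the mismatch described above.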

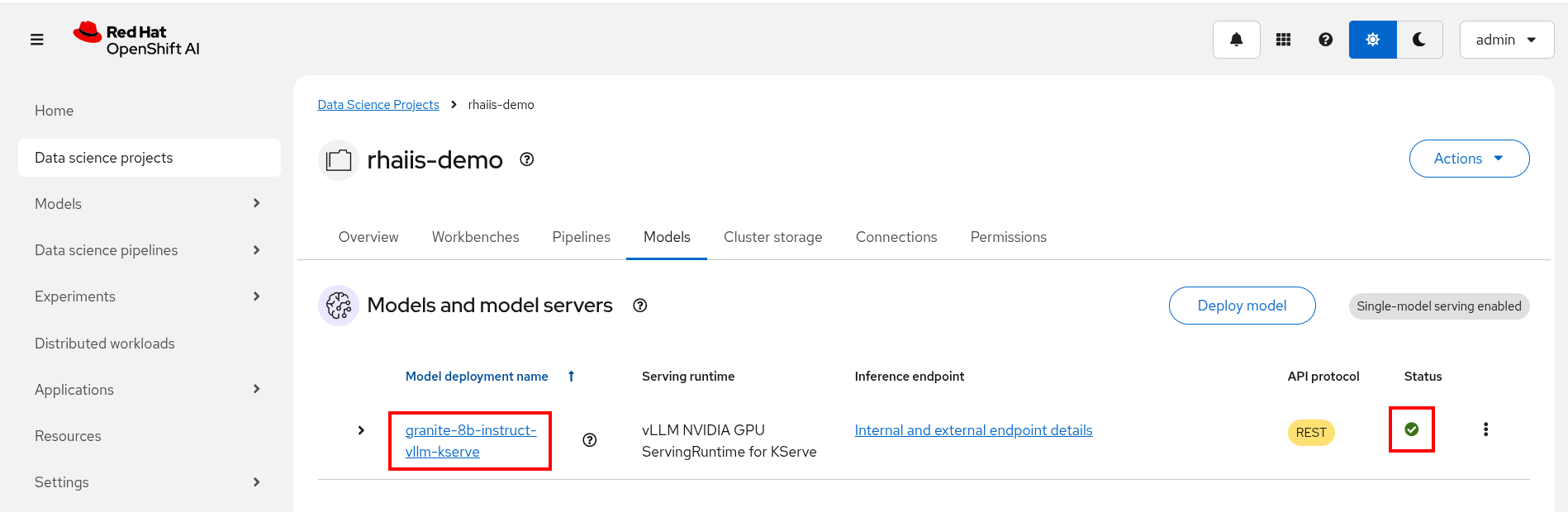
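The parser mismatch described in this section can be sketched in a few lines. The two functions below are toy parsers, not vLLM code: parse_tagged looks for calls wrapped in <tool_call> tags and parse_prefixed looks for a [TOOL_CALLS] marker followed by a JSON list. Both formats are loosely modeled on conventions used by some model families, and the function names and the sample output are assumptions made for illustration.

```python
import json
import re


def parse_tagged(text):
    """Extract calls emitted as <tool_call>{...}</tool_call>."""
    bodies = re.findall(r"<tool_call>(.*?)</tool_call>", text, flags=re.DOTALL)
    return [json.loads(body) for body in bodies]


def parse_prefixed(text):
    """Extract calls emitted as [TOOL_CALLS] followed by a JSON list."""
    marker = "[TOOL_CALLS]"
    if marker not in text:
        return []  # the expected marker never appears, so nothing is extracted
    return json.loads(text.split(marker, 1)[1])


# Hypothetical raw output from a model trained on the tagged format.
model_output = (
    '<tool_call>{"name": "get_weather", "arguments": {"city": "Boston"}}</tool_call>'
)

matched = parse_tagged(model_output)       # parser agrees with the model's format
mismatched = parse_prefixed(model_output)  # parser expects a different format
print(matched)
print(mismatched)
```

With the matching parser the call is recovered as structured data. With the mismatched parser the same output yields no tool calls at all, so the client would receive the raw text as an ordinary message.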

Deploy the vLLM model car chart

Run the following commands to deploy our vLLM-kserve helm chart. The chart version we are using is published, but we will be deploying it from our cloned repository so that we may view files and make any changes if desired.

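OPTIONAL: Before deploying, adjust your values.yaml file as described in the sections above.

If you already created the rhaiis-demo project with oc new-project earlier, the namespace exists and the first command below will report that it already exists. You can safely ignore that message.

oc create namespace rhaiis-demo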

helm install granite-8b-instruct redhat-ai-services/vllm-kserve --version 0.5.11 \
  -f workshop_code/deploy_vllm/vllm_rhoai_custom_1/values.yaml

If you didn’t add the redhat-ai-services Helm chart repository to your local Helm client, you can do so by running the following command: